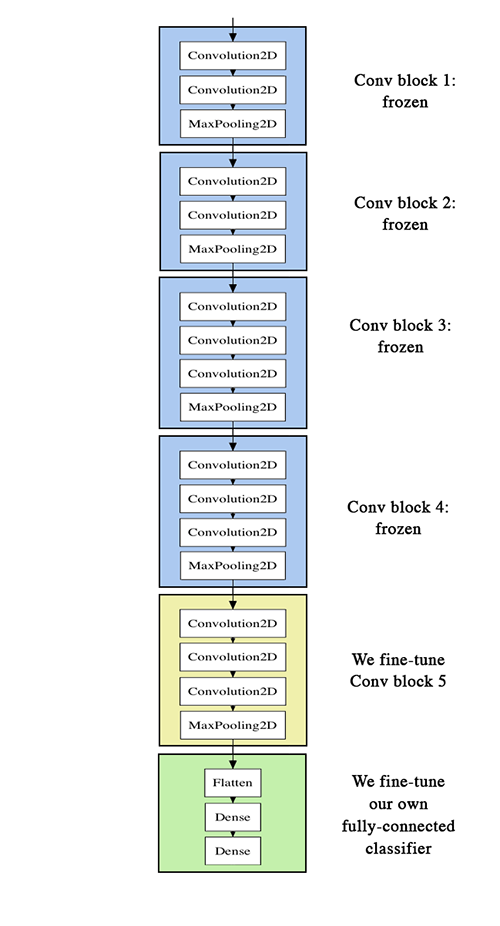

Model: "vgg16"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | [(None, 150, 150, 3)] | 0 |
| block1_conv1 (Conv2D) | (None, 150, 150, 64) | 1792 |
| block1_conv2 (Conv2D) | (None, 150, 150, 64) | 36928 |
| block1_pool (MaxPooling2D) | (None, 75, 75, 64) | 0 |
| block2_conv1 (Conv2D) | (None, 75, 75, 128) | 73856 |
| block2_conv2 (Conv2D) | (None, 75, 75, 128) | 147584 |
| block2_pool (MaxPooling2D) | (None, 37, 37, 128) | 0 |
| block3_conv1 (Conv2D) | (None, 37, 37, 256) | 295168 |
| block3_conv2 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_conv3 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_pool (MaxPooling2D) | (None, 18, 18, 256) | 0 |
| block4_conv1 (Conv2D) | (None, 18, 18, 512) | 1180160 |
| block4_conv2 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_conv3 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_pool (MaxPooling2D) | (None, 9, 9, 512) | 0 |
| block5_conv1 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv2 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv3 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_pool (MaxPooling2D) | (None, 4, 4, 512) | 0 |

Total params: 14,714,688

Trainable params: 0

Non-trainable params: 14,714,688
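The numbers in the summary can be checked by hand. The sketch below recomputes each output shape and parameter count from the layer arithmetic alone, without loading Keras. It relies on the standard VGG16 layout: 3x3 convolutions with "same" padding (spatial size unchanged) and 2x2 max pooling with stride 2 (spatial size halved, rounded down). The names `VGG16_BLOCKS` and `vgg16_base_summary` are illustrative and do not come from any library.

```python
# Per block: (number of 3x3 conv layers, output channels) for the VGG16 conv base.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def vgg16_base_summary(height=150, width=150, channels=3):
    """Return (layer name, output shape, parameter count) for each layer."""
    rows = []
    in_ch = channels
    for block, (n_convs, out_ch) in enumerate(VGG16_BLOCKS, start=1):
        for i in range(1, n_convs + 1):
            # Each filter has 3*3*in_ch weights plus one bias; there are out_ch filters.
            params = (3 * 3 * in_ch + 1) * out_ch
            rows.append((f"block{block}_conv{i}", (height, width, out_ch), params))
            in_ch = out_ch
        # 2x2 max pooling with stride 2 halves each spatial dimension (floor division).
        height, width = height // 2, width // 2
        rows.append((f"block{block}_pool", (height, width, out_ch), 0))
    return rows


rows = vgg16_base_summary()
total = sum(params for _, _, params in rows)

for name, shape, params in rows:
    print(f"{name:<14} {str((None, *shape)):<22} {params:>9,}")
print(f"Total params: {total:,}")
```

The floor division explains why 75 becomes 37 and 9 becomes 4 after pooling. Pooling layers contribute no parameters, so the total comes from the thirteen convolution layers alone. That all 14,714,688 parameters are listed as non-trainable indicates the convolutional base has been frozen.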